Lost in Translation? The transition from ASCII to Unicode with UTF-8 bridging the gap

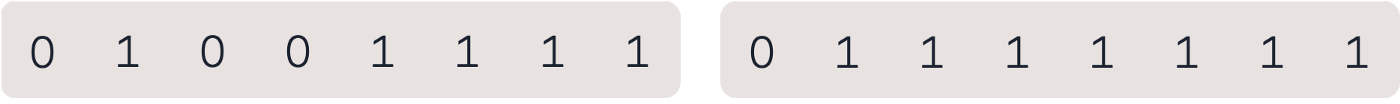

Whenever we interact with our computers or smartphones, an instant and invisible translation happens. When we enter our username in an app, make a bank transfer, or search for and eventually buy stock, we type in characters that need to be translated into numbers for the computer to understand us, and vice versa. For example, when we type in the word »bank«, the computer stores the bit sequence »01100010 01100001 01101110 01101011«. More precisely, it stores exactly these bits if it uses ASCII, the American Standard Code for Information Interchange.
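The translation of »bank« can be reproduced in a few lines of Python. This is only an illustrative sketch; the helper name `to_bits` is made up for this example.

```python
def to_bits(text: str) -> str:
    """Return the ASCII bytes of `text` as space-separated 8-bit binary strings."""
    return " ".join(format(byte, "08b") for byte in text.encode("ascii"))


print(to_bits("bank"))  # 01100010 01100001 01101110 01101011
```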

ASCII: A simple system that translates characters into numbers

ASCII was first published in 1963 and went on to become the most widely adopted system for translating characters humans can read into numbers computers can understand. Today, ASCII is still used around the world. For example, much of the SEPA banking infrastructure that we use every day is based on ASCII.

ASCII is a relatively simple 7-bit system (0XXXXXXX when stored in a byte) that is also called Base ASCII. In Base ASCII, the first bit of each byte is always 0 and is not used. In total, Base ASCII can represent 128 different characters (numbered 0 to 127), following one simple but crucial rule: one byte always represents one character. For example, the four characters in the word »bank« are translated into four bytes.
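A short Python check makes the one-byte-per-character rule concrete. It is a minimal sketch that uses Python's built-in `ascii` codec; the variable names are chosen for illustration.

```python
word = "bank"
encoded = word.encode("ascii")

# One byte per character: 4 characters become 4 bytes.
print(len(word), len(encoded))  # 4 4

# Every ASCII byte is below 128, so its leading bit is always 0.
print(all(byte < 128 for byte in encoded))  # True

# Characters outside the ASCII range cannot be encoded at all.
try:
    "Ä".encode("ascii")
except UnicodeEncodeError:
    print("this character is not part of ASCII")
```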

Because of its simplicity, Base ASCII was quickly adopted as a global standard format. It became so dominant that on March 11, 1968, U.S. President Lyndon B. Johnson ordered that all computers purchased by the U.S. federal government must support ASCII.

Way too few signs to communicate effectively and globally

But as online communication became more prevalent, it became obvious that the 128 different characters Base ASCII could represent would not work for everyone. For example, the German language with its Umlaute (»Ä« or »Ö«) needed additional numbers to be assigned to »Ä« and »Ö«. As a consequence, Germany and lots of other countries began to use extended ASCII versions, which used the 8th bit to unlock twice as many possible characters (256).

However, this resulted in a problem: different countries now used different extensions. The great advantage Base ASCII once had was gone. Instead of a universal character set everyone agreed upon, there were now different conflicting ASCII versions, each of them with their own special characters. Lost in translation, so to speak.
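The conflict is easy to see by decoding one and the same byte with several 8-bit extensions. The sketch below uses four codecs that ship with Python; which extensions a given country actually used is not stated in this article, so the selection is only an example.

```python
import unicodedata

# Four different 8-bit extensions of ASCII (chosen for illustration).
CODECS = ("latin-1", "cp437", "iso8859_7", "cp1251")

raw = bytes([0xC4])  # a single byte with the 8th bit set

# The same byte turns into a different character under each extension.
decoded = {codec: raw.decode(codec) for codec in CODECS}

for codec, char in decoded.items():
    print(f"{codec:10} -> U+{ord(char):04X} {unicodedata.name(char)}")
```

With `latin-1` the byte is the German »Ä«, while the other three codecs read it as a box-drawing line, a Greek delta and a Cyrillic letter.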

Unicode: A universal and more powerful system

The solution: Unicode. Invented in the late 1980s, Unicode is a universal character set that is used all over the world. Unicode’s approach follows a more complex logic but is built on Base ASCII, as its first 128 characters are identical. Instead of being limited to 8 bits, Unicode assigns every character a number (a code point) from a range of more than 1.1 million values, which fits comfortably into 32 bits. Unicode is constantly updated, features signs and characters from all over the world and still has enough space to include many more new characters or emoticons.
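Python strings are Unicode strings, so the numbering can be inspected directly with the built-in `ord()`. The sample characters below are picked for illustration.

```python
import sys

# ord() returns the Unicode number (code point) of a character.
for char in ("b", "Ä", "€", "😀"):
    print(f"U+{ord(char):04X}")
# U+0062
# U+00C4
# U+20AC
# U+1F600

# ASCII characters keep their old numbers in Unicode.
print(ord("b") == "b".encode("ascii")[0])  # True

# The highest number Unicode allows is U+10FFFF.
print(hex(sys.maxunicode))  # 0x10ffff
```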

However, the rise of Unicode came along with another major problem. The primary rule of ASCII was no longer valid. The simple logic that one byte always represented one character was abolished, because a Unicode character could be represented by up to four bytes, creating another major translation problem.
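To see the size difference, the word »bank« can be stored once as ASCII and once with a fixed four bytes per character. The sketch assumes the fixed-width form meant here corresponds to what Python calls `utf-32-be` (big-endian, no byte-order mark).

```python
word = "bank"

ascii_bytes = word.encode("ascii")      # one byte per character
utf32_bytes = word.encode("utf-32-be")  # four bytes per character

print(len(ascii_bytes))  # 4
print(len(utf32_bytes))  # 16

# Show the 16 bytes in groups of four, one group per character.
groups = [utf32_bytes[i:i + 4].hex() for i in range(0, len(utf32_bytes), 4)]
print(" ".join(groups))  # 00000062 00000061 0000006e 0000006b
```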

For example, imagine a website created in the 1980s with all the text written in ASCII. On this website, every single byte represents a single character in the format 0XXXXXXX. Now imagine that the website host wants to integrate some emoticons using Unicode. If the host simply switched to a multi-byte Unicode format, the entire text (written in ASCII) would be displayed incorrectly. And there is a simple reason for that error: the software reading the bytes cannot know where one character stops and another one begins, as two bytes could now be one single character instead of the two they were in ASCII. Lost in translation. Once again.
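The misreading can be simulated in Python by decoding old ASCII bytes with a multi-byte format. This is a sketch: the article does not name a specific format, so the 16-bit `utf-16-be` and 32-bit `utf-32-be` codecs are assumptions used to show the effect.

```python
legacy = "bank".encode("ascii")  # 4 bytes, one per character

# Read as 16-bit units, the same 4 bytes become only 2 unrelated characters.
as_utf16 = legacy.decode("utf-16-be")
print(len(as_utf16), [f"U+{ord(c):04X}" for c in as_utf16])
# 2 ['U+6261', 'U+6E6B']

# Read as one 32-bit unit, the value 0x62616E6B is not a valid character at all.
try:
    legacy.decode("utf-32-be")
except UnicodeDecodeError as error:
    print("cannot decode:", error.reason)
```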

The superhero finally bridging the gap: UTF-8

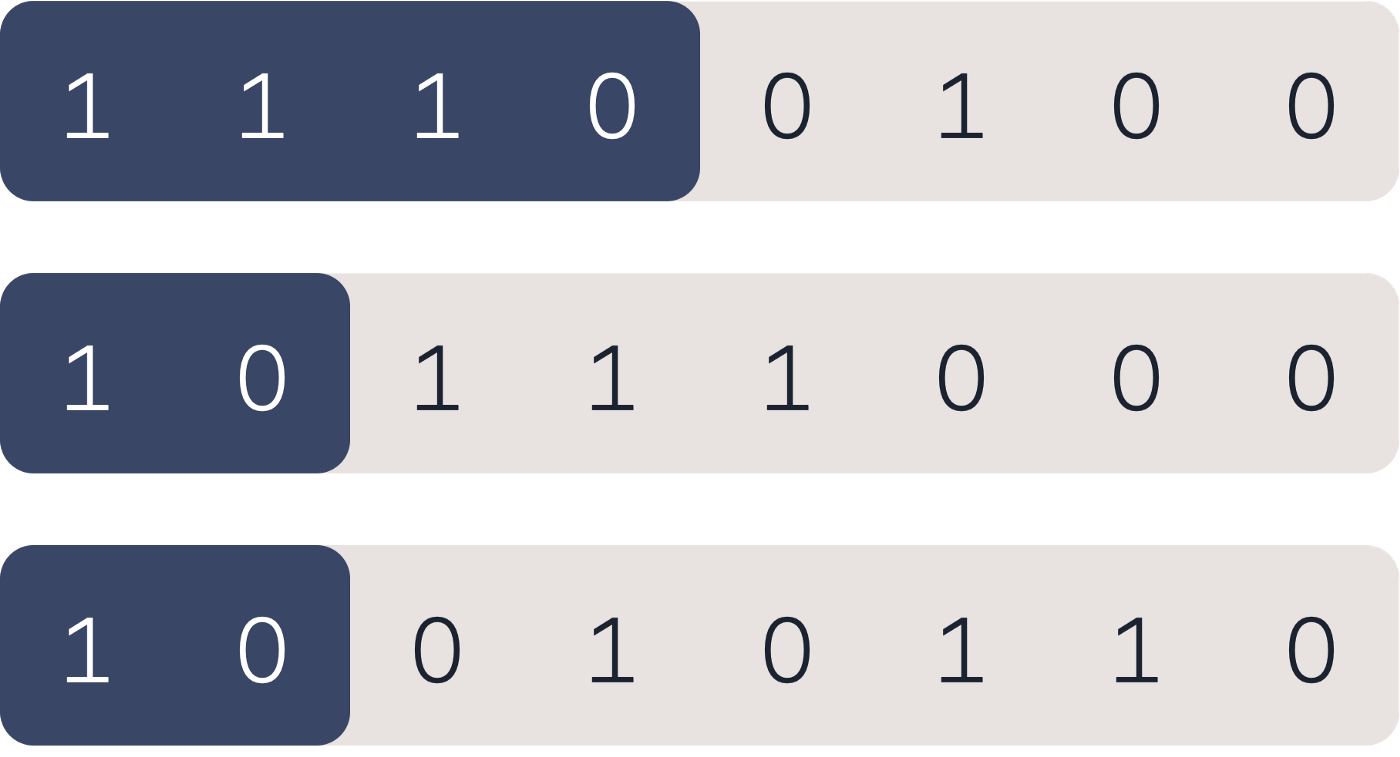

For this reason, a translator was needed and, thus, UTF-8 was invented. UTF-8 is the superhero bridging the gap between ASCII and Unicode. What is so special about UTF-8 is that it is not a character set or another language, but rather an encoding: a set of rules for storing Unicode characters as bytes. Simply put, UTF-8 provides a set of instructions on how to recognize where a character starts and stops and how many bytes a character has. It does so by adding some extra information at the beginning of each byte. The primary rule behind UTF-8: when a byte starts with ‘0’, it should be read as a single character. When it starts with, for example, 1110XXXX, it’s the start of a sequence of three bytes representing one character. Every following byte of such a sequence starts with 10, so it can never be mistaken for the start of a new character.
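The rule can be sketched as a small Python function that looks only at the leading bits of a byte. The names `sequence_length` and `split_characters` are hypothetical helpers written for this example, and the sketch is deliberately simplified: a real UTF-8 decoder also validates the following bytes.

```python
from typing import List


def sequence_length(first_byte: int) -> int:
    """Return how many bytes a UTF-8 character occupies, judged by its first byte."""
    if first_byte >> 7 == 0b0:      # 0XXXXXXX: a single byte (plain ASCII)
        return 1
    if first_byte >> 5 == 0b110:    # 110XXXXX: start of a 2-byte sequence
        return 2
    if first_byte >> 4 == 0b1110:   # 1110XXXX: start of a 3-byte sequence
        return 3
    if first_byte >> 3 == 0b11110:  # 11110XXX: start of a 4-byte sequence
        return 4
    # 10XXXXXX is a continuation byte; 11111XXX never occurs in UTF-8.
    raise ValueError("not a valid first byte of a UTF-8 character")


def split_characters(data: bytes) -> List[bytes]:
    """Cut a UTF-8 byte string into one chunk of bytes per character."""
    chunks, i = [], 0
    while i < len(data):
        n = sequence_length(data[i])
        chunks.append(data[i:i + n])
        i += n
    return chunks


data = "bÄ€😀".encode("utf-8")
for chunk in split_characters(data):
    print(" ".join(format(byte, "08b") for byte in chunk))
# 01100010
# 11000011 10000100
# 11100010 10000010 10101100
# 11110000 10011111 10011000 10000000
```

The four sample characters take one, two, three and four bytes, and the first byte of each line announces that length.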

So, if a website originally written in ASCII wants to add some emoticons using Unicode, it can use UTF-8, and all the content written in ASCII will still look the same, because UTF-8 ensures that every ASCII byte (0XXXXXXX) is read as one single character.
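This backward compatibility can be checked directly in Python. The variable names `old_page` and `new_page` are made up for this sketch of the website scenario.

```python
old_page = "bank".encode("ascii")            # content written in the ASCII era
new_page = old_page + " 😀".encode("utf-8")  # an emoticon appended later as UTF-8

# ASCII text has exactly the same bytes in UTF-8.
print("bank".encode("utf-8") == old_page)  # True

# The mixed content decodes cleanly as UTF-8: 6 characters stored in 9 bytes.
text = new_page.decode("utf-8")
print(len(text), len(new_page))  # 6 9
```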

To this day, UTF-8 remains critical for the global compatibility of different systems and devices, as it bridges the gap between Unicode and ASCII. At Solarisbank, our entire system is Unicode and UTF-8 compatible to ensure that the banking transactions of all our customers and partners run smoothly.